Human Intelligence, Artificial Intelligence: Teenager vs. Machine in SEEBURGER Enrichment Project

Machines can learn things. However, just like people, they need to be trained in their specific tasks. SEEBURGER is working together with the Hector-Seminar, a German organisation which runs extra-curricular STEM projects to support gifted and able pupils. A project that SEEBURGER offers regularly lets schoolchildren experiment with multimodal machine learning (ML) models for automatic AI image analysis. Learn more about this SEEBURGER enrichment project and discover how high-achieving pupils are learning to experiment with TensorFlow models, integrate them into scenarios and create a dialogue application.

As comic book series such as Arrow and Flash have shown, superhuman powers are very useful if you need to save humankind. However, without talented, brainy programmers and researchers behind the scenes who know what they’re doing, our heroes wouldn’t have a chance against the forces of evil. Ergo: superheroes need brainboxes to constantly save their butts.

Of course, even the brightest kids need to learn what options are out there so that they can channel their abilities in a meaningful way. Working in cooperation with the Hector-Seminar, SEEBURGER has started a regular enrichment project to give gifted and able pupils the opportunity to gain further experience in STEM.

Machine learning and automatic AI image analysis

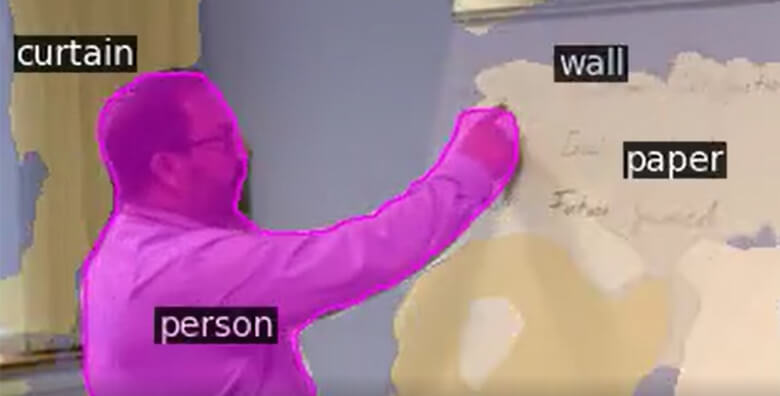

In the project currently offered by SEEBURGER, pairs of students who have qualified for the programme learn to train machines to recognise and classify image content using multimodal models. Body movements, hand gestures, symbols and numbers, a particular type of place, the number of people in a group and the distance between them could all be useful information for automatically interpreting a series of pictures, such as from a webcam. The ability to recognise whether someone is wearing a face mask (correctly) is, at the time of writing, also a very useful feature.

This is all made possible by trained ML models, which are freely available and can also be integrated into embedded IoT and browser-based applications. ML models can be used to identify people, animals, objects, and movement in images, videos, and live streams.

Although this wasn’t a condition of joining the programme, it was really useful that the two participants in the first project, which ran from September 2020 to July 2021, had already done some programming in Python or JavaScript. This upped the fun factor from the very beginning. And the pupils got to borrow some really cool ‘toys’…

The project blurb

In a project spanning the academic year, pupils use an NVIDIA Jetson Nano computer, with some help from a Coral accelerator, to gain experience in using TensorFlow models for various modalities. They then consider how they could use these models in various scenarios.

Inspired by YouTube films, games and books, participants then brainstorm how to integrate this technology into business life, in manufacturing environments, in logistics centres or in software interactions.

Participants then focus on one TensorFlow model, first understanding and then altering its source code. This could involve identifying a gesture which suggests that a particular situation is occurring, or is about to occur.

At the end of this project, participants will have created at least one multi-step dialogue to use in a particular scenario.
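To make the idea of a multi-step dialogue more concrete, here is a minimal sketch in Python. It is not the pupils' actual application: the step names, prompts and gesture labels (`wave`, `thumbs_up`, `thumbs_down`) are hypothetical, and the gestures are assumed to arrive as plain text labels from whatever recognition model is in use.

```python
# Hypothetical dialogue definition: every step has a prompt and a mapping
# from a recognised gesture label to the step that follows it.
DIALOGUE = {
    "idle": {
        "prompt": "Wave to start.",
        "next": {"wave": "confirm"},
    },
    "confirm": {
        "prompt": "Thumbs up to confirm, thumbs down to cancel.",
        "next": {"thumbs_up": "done", "thumbs_down": "idle"},
    },
    "done": {
        "prompt": "Request confirmed.",
        "next": {},
    },
}


def advance(step, gesture):
    """Return the next step; unknown gestures leave the dialogue where it is."""
    return DIALOGUE[step]["next"].get(gesture, step)


def run(gestures, start="idle"):
    """Feed a sequence of gesture labels through the dialogue."""
    step = start
    for gesture in gestures:
        step = advance(step, gesture)
    return step


final_step = run(["wave", "thumbs_up"])
print(DIALOGUE[final_step]["prompt"])
```

Keeping the dialogue as data rather than nested `if` statements means a new step or gesture can be added without touching the control flow.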

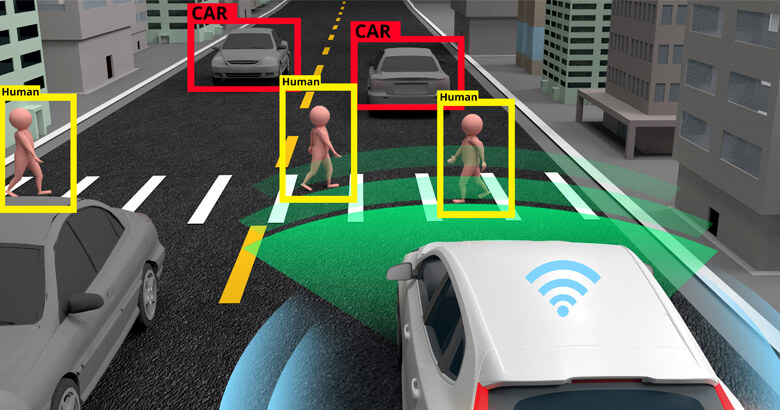

Participants are then ready to start a second project, which is due to run from September 2021 to July 2022. In this follow-on project, pupils will have the opportunity to experiment with a number of machine learning models and explore how they can be used to automatically interpret selected video streams. Pupils will again use JupyterLab to gain experience in using various trained machine learning models. These can be applied either to YouTube videos or to footage from the webcam and stereo camera which can be attached to the pupils’ Nano computers.

Students then task the models with automatically identifying elements in a live stream or a series of pictures. This may involve recognising and counting cars, trucks, people, animals or other objects. Students will be confronted with a number of questions: What are the similarities and differences between the visual inputs? What can be easily recognised, and what is tricky? Are there patterns? Are faces and posture clear? Can we calculate size, distance, speed or direction of movement?
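Two of these questions, counting objects and estimating speed and direction, can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the project's own code: the detection format (a label, a confidence score and a pixel bounding box) is hypothetical, the values are made up, and speed is measured in pixels per second because converting to real-world units would need camera calibration.

```python
from collections import Counter
from math import atan2, degrees, hypot

# Hypothetical detector output for one frame. Each box is
# (x_min, y_min, x_max, y_max) in pixels.
frame = [
    {"label": "car", "score": 0.91, "box": (100, 200, 180, 260)},
    {"label": "car", "score": 0.42, "box": (300, 210, 360, 250)},
    {"label": "person", "score": 0.88, "box": (400, 150, 430, 240)},
    {"label": "truck", "score": 0.77, "box": (500, 180, 640, 280)},
]


def count_objects(detections, min_score=0.5):
    """Count detections per label, ignoring low-confidence ones."""
    return Counter(d["label"] for d in detections if d["score"] >= min_score)


def centre(box):
    """Centre point of a bounding box."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def motion(box_before, box_after, seconds):
    """Speed (pixels per second) and heading (degrees) between two frames.

    Image coordinates grow downwards, so a positive heading between
    0 and 90 degrees means the object moved right and down.
    """
    (x0, y0), (x1, y1) = centre(box_before), centre(box_after)
    dx, dy = x1 - x0, y1 - y0
    return hypot(dx, dy) / seconds, degrees(atan2(dy, dx))


counts = count_objects(frame)
speed, heading = motion((100, 200, 180, 260), (130, 240, 210, 300), seconds=0.5)
print(dict(counts))
print(f"{speed:.1f} px/s at {heading:.1f} degrees")
```

The second car is dropped because its score is below the threshold, which is one simple answer to "what is tricky?": the threshold trades missed objects against false alarms.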

Because of the COVID-19 restrictions in force during the project, the pupils were allowed to take the equipment home to work with. Every three weeks, they took part in a video conference with members of the SEEBURGER R&D department to discuss what they had been doing and what they had discovered.

During the project, which ran for the first time from September 2020 to July 2021, the participants were able to gain a good understanding of how multimodal machine learning models could be trained to automatically recognise and identify elements in pictures and film. At the same time, they also gained a wealth of practical experience in using the hardware and software needed for this.

AI image analysis offers huge benefits. In the health service, AI is successfully being used to automatically analyse X-rays. Social media, online shops and other online platforms use the technology to categorise newly posted photos so that they can be found more easily. In cartography, satellite images are automatically analysed with AI so that maps can be updated.

There are of course two sides to every coin. The American company Clearview AI, which collected people’s photos from the internet without their knowledge or consent to build a biometric database, is a current example of the darker side. Where Batman is, the Joker will never be far behind. With this in mind, it’s imperative to help talented young people learn to use their skills creatively and well so that they make a valuable contribution to society. This doesn’t necessarily mean they will save the world, although who knows?

The Hector-Seminar

The Hector-Seminar is a programme for able and gifted pupils in Germany’s North Baden region. Every week, pupils research and work on questions and issues in STEM (Science, Technology, Engineering & Maths). To be accepted onto the programme, schoolchildren sit a central entrance test. The programme starts at age 11 and runs until participants finish their schooling at 18. SEEBURGER came on board in September 2020 with the project described above.

Written by: Dr. Johannes Strassner

Dr. Johannes Strassner, Head of Research, is responsible for application-oriented research on innovation of SEEBURGER's business integration technologies and solutions. The current focus is on Machine Learning, Big Data, API, Blockchain and IoT. Johannes Strassner received his doctorate at the University of Koblenz-Landau for his work on ‘Parametrizable Human Models for Dialogue Environments’. He joined SEEBURGER in 2011 after research activities at the Massachusetts Institute of Technology and the Fraunhofer-Gesellschaft.